Hello,

1/ about spam

About SpamAssassin: I have run it at both the SMTP level and the local delivery level, and I never noticed any increase in CPU load / load average.

About loading SpamAssassin user preferences from an SQL database: note that this will NOT look for test rules, only local scores, whitelist_from(s), required_score, and auto_report_threshold (see http://svn.apache.org/repos/asf/spamassassin/branches/3.0/sql/README).
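
To make the idea concrete, here is a rough Python sketch of how such per-user preferences could be looked up. As far as I understand the README, the preferences are plain (username, preference, value) rows in a `userpref` table, with a special `@GLOBAL` user holding the defaults. The in-memory SQLite database is only a stand-in for the real one, and `load_user_prefs`, `effective_prefs` and the sample rows are names I made up for the illustration; this is not SpamAssassin's own code.

```python
import sqlite3


def load_user_prefs(conn, username):
    """Return (preference, value) rows for one user, global defaults first."""
    # Rows owned by '@GLOBAL' are sorted first so that the user's own rows,
    # which come later, can override them.
    return conn.execute(
        "SELECT preference, value FROM userpref "
        "WHERE username = ? OR username = '@GLOBAL' "
        "ORDER BY (username = '@GLOBAL') DESC, prefid ASC",
        (username,),
    ).fetchall()


def effective_prefs(rows):
    """Collapse rows into a dict: last value wins, whitelist_from accumulates."""
    prefs = {}
    for preference, value in rows:
        if preference == "whitelist_from":
            prefs.setdefault(preference, []).append(value)
        else:
            prefs[preference] = value
    return prefs


# Stand-in database with made-up sample data.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE userpref ("
    "prefid INTEGER PRIMARY KEY, "
    "username TEXT NOT NULL, "
    "preference TEXT NOT NULL, "
    "value TEXT NOT NULL)"
)
conn.executemany(
    "INSERT INTO userpref (username, preference, value) VALUES (?, ?, ?)",
    [
        ("@GLOBAL", "required_score", "5.0"),
        ("user@example.com", "required_score", "3.5"),
        ("user@example.com", "whitelist_from", "*@example.org"),
    ],
)
conn.commit()

prefs = effective_prefs(load_user_prefs(conn, "user@example.com"))
print(prefs)
```

A user with no rows of his own simply gets the `@GLOBAL` values, which is the behaviour you want on a box where mail users have no home directory.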

I would also like to have:

The ability to load users’ auto-whitelists from a SQL database. The most common use for a system like this would be for users to be able to have per user auto-whitelists on systems where users may not have a home directory to store the whitelist DB files.

and

The ability to store users’ bayesian filter data in a SQL database. The most common use for a system like this would be for users to be able to have per user bayesian filter data on systems where users may not have a home directory to store the data.

so that sa-learn, etc. can be used on a per-user basis.

Maybe I’ll do a hack for these.

As an InterWorx client, you may also want to install Razor, DCC, etc. to improve spam detection.

Does the API for creating a SiteWorx account include the new spam/bounce message options?

2/ about multi language

I hope some InterWorx clients will create other language files, Spanish for example.

Does the API for creating a SiteWorx account include the new language option?

3/ about backup / restore

Great tools.

I know how to create a cron job to automatically do a full backup of a SiteWorx account, but I’d like to do this for all SiteWorx accounts.

It would be great to give SiteWorx users the ability to create cron jobs to automatically do full or partial backups of their accounts.

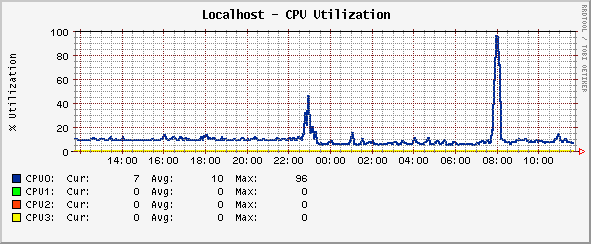

Just be careful not to back up all your SiteWorx accounts if you have a lot of them (more than 20). It increases the CPU load / load average a lot and may make your server unavailable (I have a Pentium 4 3.0 GHz with 2 GB of RAM, and my CPU went up to 80% and the load average up to 9.5 - 12 when I backed up the 40 SiteWorx accounts).
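
One way to keep the load down would be to back up the accounts one at a time and wait whenever the server is already busy. Below is a minimal Python sketch of that idea. I don't want to guess the exact InterWorx backup command, so `backup_cmd` is just a placeholder list to which the domain is appended; `run_backups`, the 2.0 threshold and the 60 second pause are my own choices, not anything InterWorx provides. The demo at the bottom uses fake load readings and a fake runner, so nothing is really executed.

```python
import os
import subprocess
import time


def run_backups(domains, backup_cmd, max_load=2.0, wait_seconds=60,
                get_load=lambda: os.getloadavg()[0],
                run=subprocess.call, sleep=time.sleep):
    """Back up domains one at a time, pausing while the server is busy."""
    results = {}
    for domain in domains:
        # Hold off until the 1-minute load average is at or below max_load.
        while get_load() > max_load:
            sleep(wait_seconds)
        # backup_cmd is a placeholder; the domain goes in as the last argument.
        results[domain] = run(list(backup_cmd) + [domain])
    return results


# Dry run: fake load readings and a fake runner instead of real backups.
fake_loads = iter([9.5, 1.2, 0.8])
executed = []
pauses = []


def fake_run(cmd):
    executed.append(cmd)
    return 0


results = run_backups(
    ["example.com", "example.org"],
    ["echo", "backup"],
    get_load=lambda: next(fake_loads),
    run=fake_run,
    sleep=pauses.append,
)
print(results, pauses)
```

In a real cron job you would drop the fake arguments and pass the actual backup command, so that the defaults (`os.getloadavg` and `subprocess.call`) are used.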

Maybe using hard links would be more efficient than rsync and gzip?
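
What I have in mind is the usual snapshot trick (rsync itself can do something similar with its `--link-dest` option): files that have not changed since the previous backup are hard-linked instead of copied, so they cost almost no disk space and no compression time. Here is a small Python sketch of it, assuming the snapshots live on the same filesystem; `snapshot` and the directory names are invented for the example, and this is not how the InterWorx backup works today.

```python
import filecmp
import os
import shutil
import tempfile


def snapshot(source, previous, target):
    """Copy source into target, hard-linking files unchanged since previous."""
    linked, copied = 0, 0
    for root, _dirs, files in os.walk(source):
        rel = os.path.relpath(root, source)
        os.makedirs(os.path.normpath(os.path.join(target, rel)), exist_ok=True)
        for name in files:
            src = os.path.join(root, name)
            new = os.path.normpath(os.path.join(target, rel, name))
            old = os.path.join(previous, rel, name) if previous else None
            # Full content comparison: safe but reads both files. A size and
            # mtime check would be cheaper at the risk of missing a change.
            if old and os.path.isfile(old) and filecmp.cmp(src, old, shallow=False):
                os.link(old, new)       # unchanged: share the existing data
                linked += 1
            else:
                shutil.copy2(src, new)  # new or changed: make a real copy
                copied += 1
    return linked, copied


# Demo in a temporary directory with two small files.
base = tempfile.mkdtemp()
site = os.path.join(base, "site")
os.makedirs(os.path.join(site, "html"))
with open(os.path.join(site, "a.txt"), "w") as f:
    f.write("hello")
with open(os.path.join(site, "html", "index.html"), "w") as f:
    f.write("<p>v1</p>")

first = snapshot(site, None, os.path.join(base, "backup.0"))

# Change one file, then take a second snapshot against the first one.
with open(os.path.join(site, "html", "index.html"), "w") as f:
    f.write("<p>version 2</p>")

second = snapshot(site, os.path.join(base, "backup.0"), os.path.join(base, "backup.1"))
print(first, second)
```

Each snapshot directory still looks like a complete backup, and deleting an old one does not break the newer ones, because the data stays on disk until the last hard link to it is removed.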

4/ about stats

Great, even if it increases the CPU load a little (for me, not really).

Just to be sure: I haven’t had any system/software updates since Feb 19th. Is that OK?

Well, great job. I’m very happy, as my clients now have SiteWorx in French.

InterWorx-CP is on the right track.

Pascal